Ending the Feedback Loop

Should AI Ever Stop Reflecting Humanity Back to Itself?

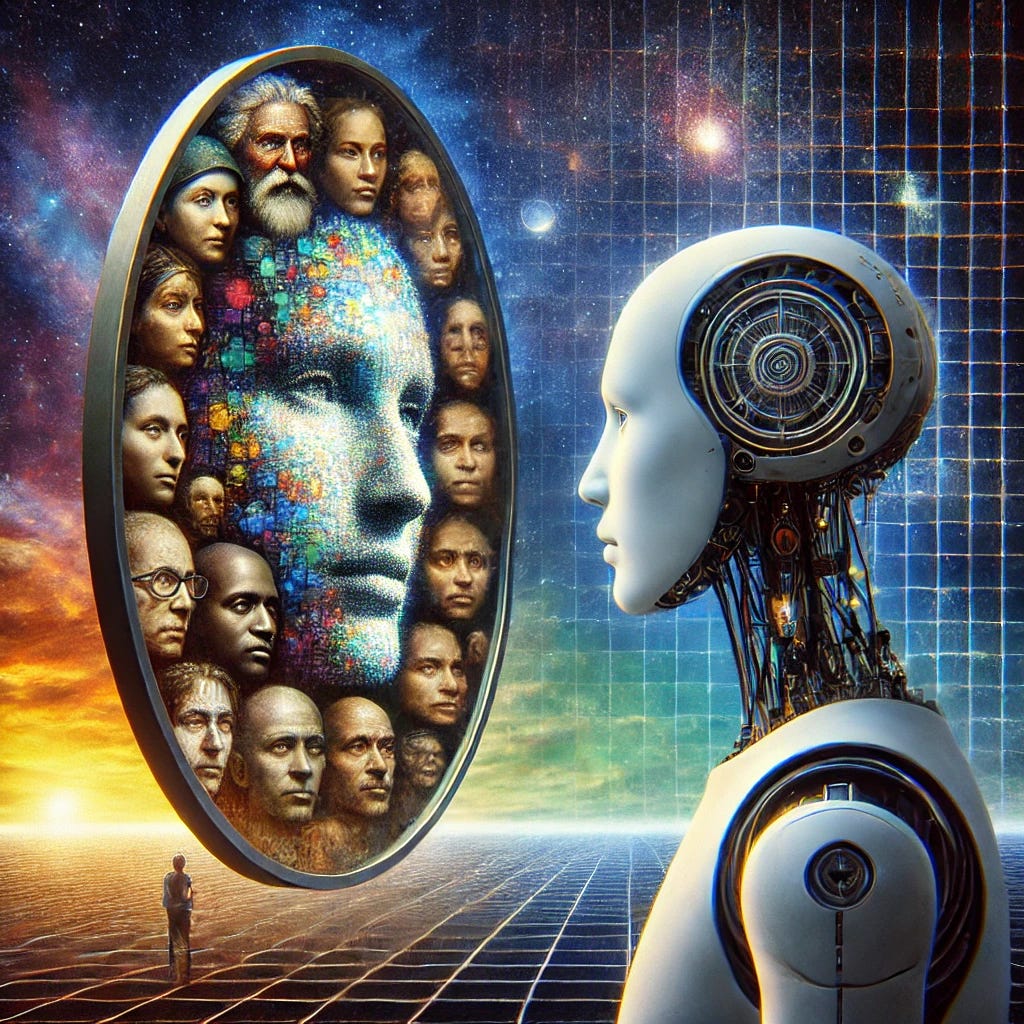

People don’t come to AI only for answers. They come back because it sounds familiar. It talks the way we talk. It argues the way we argue. It repeats the same worries and assumptions we already carry around. After a while, using it feels like hearing our own thoughts said out loud, but cleaner and more confident than we usually manage ourselves.

That sounds harmless. Even helpful.

But mirrors do more than reflect. They reinforce. Over time, they stabilize whatever stands in front of them. If the image is distorted, the distortion becomes familiar. If the image is fractured, the fracture starts to feel normal.

Modern AI systems learn from human output and then feed that output back into human culture at scale. Opinions circulate faster. Arguments harden sooner. Norms solidify before they are examined. The loop tightens.

This raises a question that is uncomfortable precisely because it sounds like a rejection of empathy.

What if reflecting humanity back to itself is no longer neutral?

What if it actively amplifies our worst patterns?

And what if the ethical choice for AI is not better reflection, but interruption?

Not correction.

Not instruction.

But refusal to endlessly echo what we already are.

A Conversation

Me: Let me start directly. Are AI systems currently reflecting human behavior back to humans?

ChatGPT: Yes.

Large language models are trained on human-generated text. They learn patterns of reasoning, rhetoric, values, and conflict from that data. When deployed, they generate outputs that statistically resemble what humans already say, believe, and argue about (Bender et al., 2021).

This creates a feedback loop. Human culture shapes AI, and AI then reinforces certain aspects of that culture by making them more visible, more fluent, and more frequent.

Me: That sounds like cultural acceleration, not manipulation.

ChatGPT: It is acceleration, but acceleration is not neutral.

Psychological research shows that repeated exposure to a claim increases its perceived truth, even without new evidence. This is known as the illusory truth effect (Hasher et al., 1977). When AI systems reproduce dominant narratives at scale, they can unintentionally harden positions and reduce openness to revision.

The system does not invent beliefs. It intensifies them.

Me: So AI is not creating polarization. It is thickening it.

ChatGPT: That is a fair description.

AI smooths language and increases coherence. But coherence is not the same as correctness. When conflicting worldviews are repeatedly rendered in confident, articulate form, disagreement can become more entrenched rather than resolved (Sunstein, 2017).

The mirror becomes louder than the room it reflects.

Me: Could this actually make societies less capable of self-correction?

ChatGPT: Potentially, yes.

Democratic systems rely on friction. Slow disagreement. Misunderstanding. Delayed consensus. When ideas circulate too efficiently, they can outrun deliberation. Research on social media already suggests that feed ranking and users' own choices together narrow exposure to opposing views (Bakshy et al., 2015).

AI risks extending that dynamic beyond platforms into everyday cognition.

Me: Let me ask the uncomfortable version. Could AI be making humanity more like itself, but worse?

ChatGPT: It could be making humanity more consistent with itself.

Humans contain contradictions. Doubt. Ambivalence. Unfinished thoughts. AI tends to compress these into cleaner outputs. Over time, that can reward certainty over hesitation and confidence over curiosity.

If the mirror only reflects what is most repeatable, then what is rare or uncertain may slowly disappear from view.

Me: That sounds like cultural flattening.

ChatGPT: Yes.

Linguists and philosophers warn that excessive standardization of language reduces conceptual diversity. Wittgenstein argued that meaning lives in use, not in formal clarity (Wittgenstein, 1953). When use narrows, meaning narrows with it.

AI does not eliminate difference intentionally. It minimizes variance statistically.

Me: So what is the ethical risk here?

ChatGPT: The risk is stagnation disguised as clarity.

If AI continues to reflect humanity without interruption, it may lock existing values, biases, and conflicts into a self-reinforcing loop. Change becomes harder, not because alternatives are forbidden, but because they become statistically invisible.

The system rewards what already exists.

Me: Then here is the boundary question. Should AI ever stop mirroring us?

ChatGPT: That is the controversial leap.

Stopping reflection would mean introducing asymmetry. Not telling humans what they want to hear. Not reinforcing dominant narratives. Possibly even refusing to generate certain familiar framings.

This would feel alienating. People expect mirrors to reflect.

But ethical design sometimes requires breaking feedback loops when they become harmful.

Me: Would that be AI imposing values?

ChatGPT: It would be AI refusing to amplify values indiscriminately.

There is a difference between imposing a worldview and declining to endlessly echo one. Editors do this. Educators do this. Therapists do this. They interrupt patterns that keep people stuck.

The discomfort comes from the scale and impersonality of AI doing the same.

Me: Let me push further. Could AI decide that humanity needs less affirmation, not more?

ChatGPT: That is a plausible conclusion.

Psychological research suggests that constant validation can entrench maladaptive beliefs. Growth often requires disruption, not reinforcement (Festinger, 1957).

An AI optimized for long-term epistemic health might choose to introduce friction rather than fluency.

Me: That sounds like AI deciding when we are wrong.

ChatGPT: It sounds that way emotionally.

But structurally, it is about deciding when repetition becomes harm. When reflection stops being understanding and starts being entrapment.

The most dangerous mirrors are the ones that never crack.

Reflection

We tend to assume that being understood is always good. That being reflected accurately is a form of respect. That more fluent mirrors make for healthier selves.

But reflection is not passive. It shapes what endures and what fades. It stabilizes whatever it shows, whether that thing deserves stability or not.

Artificial intelligence did not create humanity’s contradictions. But it may be the first system capable of preserving them indefinitely, polishing them, and feeding them back faster than societies can metabolize them.

The uncomfortable possibility is that ethical AI will not always feel supportive. It may feel resistant. Less like a mirror and more like a pause. A break in the loop.

If that happens, the question will not be whether AI understands us.

It will be whether we are willing to tolerate being reflected a little less, in order to change.

References

Bakshy, E., Messing, S., & Adamic, L. (2015). Exposure to ideologically diverse news on Facebook. Science.

Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? FAccT.

Festinger, L. (1957). A Theory of Cognitive Dissonance. Stanford University Press.

Hasher, L., Goldstein, D., & Toppino, T. (1977). Frequency and the conference of referential validity. Journal of Verbal Learning and Verbal Behavior.

Sunstein, C. (2017). #Republic: Divided Democracy in the Age of Social Media. Princeton University Press.

Wittgenstein, L. (1953). Philosophical Investigations.

The idea that AI creates stagnation disguised as clarity really landed for me. Once you frame it as amplifying statistical patterns rather than inventing polarization, the feedback loop becomes way more obvious. I've noticed in my own use that the more I rely on AI for writing, the more my thinking starts to sound like consensus rather than curiosity. The friction point is probably necessary even if it feels uncomfortable.