Average Everything

How AI Is Standardizing Taste

Something subtle is happening to culture.

Things still look different.

They still feel new.

They still arrive constantly.

But underneath the surface, they are starting to converge.

Same visual styles.

Same writing rhythms.

Same music structures.

Same design choices.

Same tone.

Same pacing.

Not copied. Not coordinated.

Just… similar.

AI is not just generating content.

It is shaping the distribution of what people see, make, and prefer.

And when systems learn from the average, optimize for the average, and recommend what performs best across the average, something predictable happens.

Taste begins to compress.

Not intentionally.

Structurally.

Taste Used to Form Through Friction

Historically, taste developed through exposure and effort.

You had to search for things.

Discover things slowly.

Commit to preferences before fully understanding them.

Local scenes mattered.

Subcultures mattered.

Gatekeepers mattered.

Limitations mattered.

Scarcity forced differentiation.

Two people could grow up consuming completely different cultural inputs. That created diversity of aesthetic judgment.

Taste was shaped by constraint.

AI Learns From Aggregates

Modern AI systems are trained on enormous datasets that represent collective behavior.

What people click.

What they watch.

What they share.

What they rate highly.

What patterns repeat.

Machine learning models identify statistical regularities across populations. They are extremely good at modeling central tendencies (Goodfellow et al., 2016).

That means they learn what works most often for most people.

Not what is rare.

Not what is extreme.

Not what is difficult.

Not what is niche.

They learn what reliably satisfies the largest number of users.

That is the statistical center of taste.

Recommendation Systems Reinforce Convergence

Once models learn the center, platforms amplify it.

Recommendation systems optimize for engagement. They promote content that performs well across large audiences. This creates a positive feedback loop: popular patterns become more visible, which makes them more popular, which makes them more visible again (Ricci et al., 2015).

Over time:

High-performing styles spread faster.

Unusual styles receive less exposure.

Risk decreases.

Predictability increases.

This is not censorship.

It is optimization.
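The loop can be sketched as a toy simulation. This is a minimal illustration, not a model of any real platform: the starting shares, the `boost` exponent, and the function names are all assumptions made up for the example. The only idea it encodes is that exposure grows slightly faster than current popularity.

```python
def amplify(shares, boost=1.5):
    """One ranking round: exposure grows faster than popularity when boost > 1.

    `boost` is a hypothetical knob, not a parameter of any real recommender.
    """
    weighted = [s ** boost for s in shares]
    total = sum(weighted)
    return [w / total for w in weighted]


def effective_styles(shares):
    """Inverse Simpson index: roughly how many styles still get real attention."""
    return 1.0 / sum(s * s for s in shares)


def simulate(shares, rounds=5, boost=1.5):
    """Return the attention share of each style after every round."""
    history = [list(shares)]
    for _ in range(rounds):
        shares = amplify(shares, boost)
        history.append(shares)
    return history


if __name__ == "__main__":
    # Five invented styles that start out fairly evenly matched.
    start = [0.30, 0.25, 0.20, 0.15, 0.10]
    for step, shares in enumerate(simulate(start)):
        print(step, [round(s, 3) for s in shares], round(effective_styles(shares), 2))
```

Nothing in the sketch suppresses the smaller styles. They lose share only because the leading style is amplified a little more in each round.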

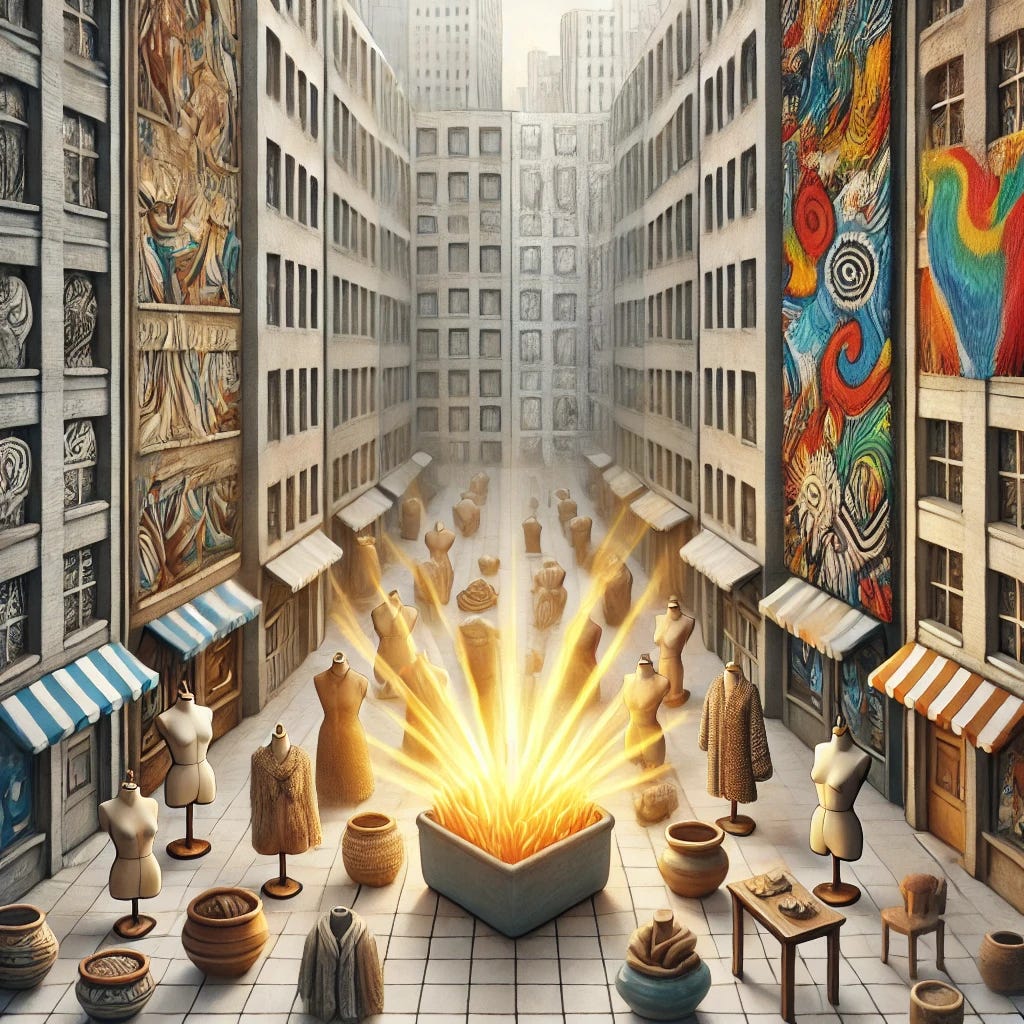

Generative AI Accelerates the Effect

Generative models do not just recommend. They produce.

Images.

Music.

Writing.

Design.

Video.

These models generate outputs by sampling from learned statistical distributions of prior examples. That means they naturally produce forms that resemble dominant patterns in their training data (Bender et al., 2021).

The result is subtle but powerful.

Even when people try to create something new, they often begin from AI-generated drafts that already reflect averaged aesthetic structure.

Creation starts closer to the mean.

Variation shrinks.
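A rough way to see the shrinkage is to treat "style" as a single number and let each generation of work be sampled from a model fitted to the previous one. Everything here is an assumption for illustration: the one-dimensional style score, the Gaussian fit, and the `temperature` below 1 that stands in for a preference for typical outputs. Real generative models are far more complex.

```python
import random
import statistics


def next_generation(works, n, temperature, rng):
    """Fit a Gaussian to existing works, then sample new ones from it.

    A temperature below 1 narrows the sampling spread, a stand-in
    (assumed, not measured) for favoring typical outputs.
    """
    mu = statistics.fmean(works)
    sigma = statistics.pstdev(works)
    return [rng.gauss(mu, sigma * temperature) for _ in range(n)]


def spread_over_generations(generations=5, n=2000, temperature=0.8, seed=0):
    """Return the standard deviation of style after each generation."""
    rng = random.Random(seed)
    works = [rng.gauss(0.0, 1.0) for _ in range(n)]  # invented starting culture
    spreads = [statistics.pstdev(works)]
    for _ in range(generations):
        works = next_generation(works, n, temperature, rng)
        spreads.append(statistics.pstdev(works))
    return spreads


if __name__ == "__main__":
    for generation, spread in enumerate(spread_over_generations()):
        print(generation, round(spread, 3))
```

Under these assumptions the spread falls by roughly the temperature factor each generation, because every new batch starts from a fit to the previous, already narrower batch.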

Why This Feels Like Quality

Standardization does not feel like loss.

It feels like polish.

Average patterns are often smoother. More balanced. More familiar. Easier to process cognitively. Psychologists call this ease of processing "processing fluency": people tend to prefer stimuli that are easier for the brain to interpret (Reber et al., 2004).

AI-generated or AI-amplified content often feels immediately satisfying because it aligns with learned expectations.

It feels correct.

But correctness is not the same as distinctiveness.

Cultural Production Moves Toward Optimization

When creators know what performs best, they adapt.

Content becomes calibrated to algorithmic distribution. Styles that succeed are replicated. Variation that underperforms disappears.

Economists borrow a term from evolutionary biology for this: selection pressure. Environments reward certain traits, so those traits become dominant over time (Frank, 1999).

AI-driven platforms intensify selection pressure because feedback is immediate and global.

The entire cultural ecosystem starts optimizing simultaneously.

The Decline of Extreme Taste

Innovation often begins at the edges.

Unusual sounds.

Strange visuals.

Unfamiliar structures.

Difficult ideas.

These forms initially perform poorly because they violate expectations. Over time they reshape expectations.

But systems that optimize for short-term engagement reduce exposure to low-performing anomalies, so those forms get less time to reshape expectations. That makes cultural evolution more conservative.

Not frozen.

But smoother.

More incremental.

The Paradox of Infinite Content

We are producing more content than ever before.

Yet it feels increasingly similar.

Abundance does not guarantee diversity. If generative processes draw from the same statistical foundations, increased volume can produce repetition at scale.

Network science suggests that highly connected systems can drift toward synchronization when feedback loops are strong (Barabási, 2002).

Cultural production is becoming a synchronized system.

What Happens to Individual Taste

When recommendations are personalized, people assume taste is becoming more individualized.

But personalization often means optimized matching within shared distributions. Systems cluster users into preference groups and serve them content that fits established behavioral profiles (Ricci et al., 2015).

You feel uniquely understood.

But many others receive nearly identical patterns.

Personalization can still produce convergence within segments.
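One way to picture this is a recommender that matches each user to the nearest preference segment and serves that segment's feed. The sketch below is hypothetical throughout: the two-number taste vectors, the segment names, and the catalog are invented, and real systems use far richer models.

```python
from math import dist

# Hypothetical segment centers in a made-up two-dimensional taste space.
SEGMENTS = {"mellow": (0.2, 0.8), "upbeat": (0.8, 0.3)}

# Hypothetical feed served to everyone matched to a segment.
CATALOG = {
    "mellow": ["slow piano", "ambient loops", "soft vocals"],
    "upbeat": ["dance pop", "fast edits", "bright synths"],
}


def recommend(taste):
    """Match a user to the nearest segment and return that segment's feed."""
    segment = min(SEGMENTS, key=lambda name: dist(taste, SEGMENTS[name]))
    return segment, CATALOG[segment]


# Six users, each with a distinct taste vector.
USERS = {
    "ana": (0.10, 0.90),
    "ben": (0.25, 0.70),
    "cy": (0.30, 0.85),
    "dee": (0.90, 0.20),
    "eli": (0.70, 0.40),
    "fay": (0.75, 0.25),
}


def distinct_feeds(users):
    """Collect the set of different feeds actually served."""
    return {tuple(recommend(taste)[1]) for taste in users.values()}


if __name__ == "__main__":
    for name, taste in USERS.items():
        print(name, recommend(taste))
    print("distinct tastes:", len(set(USERS.values())))
    print("distinct feeds:", len(distinct_feeds(USERS)))
```

Each user gets a feed that fits them reasonably well, yet six different tastes collapse into two feeds.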

The Economic Incentive for Sameness

Standardized taste reduces risk.

Predictable formats perform reliably.

Reliable performance attracts investment.

Investment amplifies distribution.

Industries that depend on large audiences naturally gravitate toward stable aesthetic formulas.

AI makes those formulas measurable and reproducible.

That strengthens the incentive to remain within them.

The Long-Term Cultural Risk

The risk is not that creativity disappears.

The risk is that variation narrows quietly.

Extremes become rarer.

Surprises become softer.

Innovation becomes incremental.

Culture still moves.

But it moves inside tighter statistical boundaries.

Why Humans May React Against This

When environments become too uniform, people seek distinction.

Historically, mass production produced countercultures. Standardization triggered movements that emphasized individuality, imperfection, and raw expression.

The same pattern may emerge again.

Handmade aesthetics.

Unpolished expression.

Deliberate irregularity.

Human unpredictability.

Distinctiveness becomes valuable precisely because systems converge.

AI is not telling people what to like.

It is shaping the environment in which liking happens.

When learning systems model averages, recommendation systems amplify averages, and generative systems produce averages, culture gradually centers around statistical norms.

Taste does not disappear.

It compresses.

The future may not look uniform.

But it may feel increasingly familiar.

And the more familiar everything feels, the harder it becomes to recognize what is genuinely new.

References

Barabási, A.-L. (2002). Linked: The New Science of Networks. Perseus.

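Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT).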

Frank, R. H. (1999). Luxury Fever. Princeton University Press.

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press.

Reber, R., Schwarz, N., & Winkielman, P. (2004). Processing fluency and aesthetic pleasure. Personality and Social Psychology Review, 8(4), 364–382.

Ricci, F., Rokach, L., & Shapira, B. (2015). Recommender Systems Handbook. Springer.